What next, a DOS version?? [Free tool and source code to temporarily prevent a computer from entering sleep mode - 32-bit and 64-bit versions now support Windows XP!]

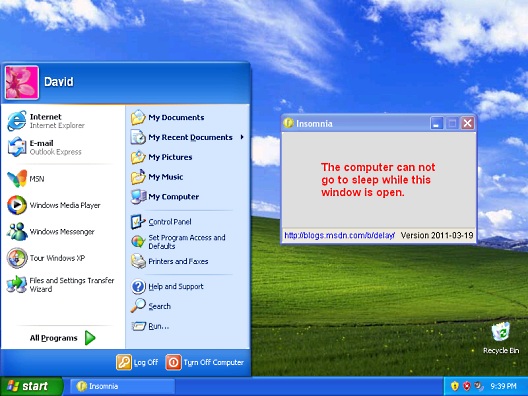

I used .NET 3.5 to write the first version of Insomnia about a year and a half ago. Its purpose in life is to make it easy for anyone to temporarily prevent their computer from going to sleep without having to futz with system-wide power options every time. Insomnia did its job well and people used it in ways I didn't expect: there were requests to allow the window to be minimized to the notification area, so I posted an update a few months later which allowed that. As a result, some people leave Insomnia running for extended periods of time (e.g., many days) and seemed likely to benefit from a version that didn't include the overhead of the .NET Framework, so I created new, native-code 32- and 64-bit versions of Insomnia late last year. These new versions did exactly what they were intended to and were also well received - but there's a catch...

Every couple of weeks or so, Insomnia gets featured by one of those "cool app of the day" sites (which is awesome, so thanks for that!). I never know when it's going to happen, but I can tell exactly when it does because I suddenly get a flurry of comments telling me the native-code versions don't run on Windows XP...

Aside: Many of you may be too young to remember; Windows XP is an operating system Microsoft released about ten years ago - practically an eternity in computing terms! And yet, a lot of people are still running XP...;)

Although I didn't set out to not support Windows XP, I also didn't make a specific effort to support it - and as the saying goes, "if you don't test it, it won't work". Well, it didn't work, so I investigated and summarized my findings in a reply on my blog:

I've looked into the Windows XP (SP3) issue just now - the "ordinal 380" error is due to the fact that the LoadIconMetric API isn't supported on OSes prior to Windows Vista. It's easy enough to use the more general LoadIcon API and then native Insomnia starts successfully on XP - but it doesn't show any text. The missing text is because the LM_GETIDEALSIZE message isn't supported prior to Vista, either - and in this case the simpler LM_GETIDEALHEIGHT message isn't a direct substitute. What's more, the current icon doesn't seem to be recognized by XP, either (it shows the generic application icon in Explorer and nothing in the tray). Neither of these issues (text measurement and icon) are overly difficult to fix, but they're also not trivial and I'm not sure how worthwhile it is to spend time and effort to support native Insomnia on Windows XP when the .NET version is reported to work just fine...

That said, I'm open to input here. If a lot of people think this is useful, I'll look into doing the work - but if everyone's happy to use the .NET version on XP, I'm happy to let them.

:)

For what it's worth, I don't recall anybody coming back with a compelling reason why XP support is necessary - but after getting another round of XP feedback recently, I decided it was something I should do simply because it comes up so often. Therefore, I'm happy to announce that Insomnia's native 32-bit and 64-bit versions now support Windows XP!

Note: With today's update (and in lieu of feedback to the contrary), the native versions of Insomnia are believed to run everywhere the .NET version does. Because there's no functional difference between the native- and managed-code implementations, I'll probably deprecate the .NET version soon. (Please don't get me wrong: I love the significant productivity benefits of using .NET! They're just moot here because I'm already doing the work to maintain the native implementations.)

Notes:

-

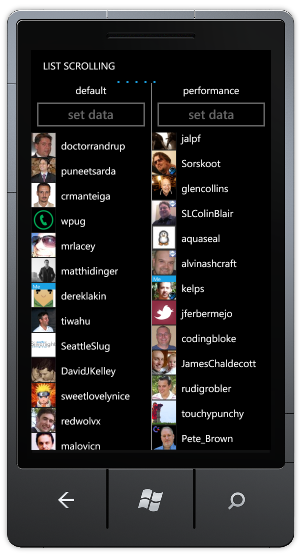

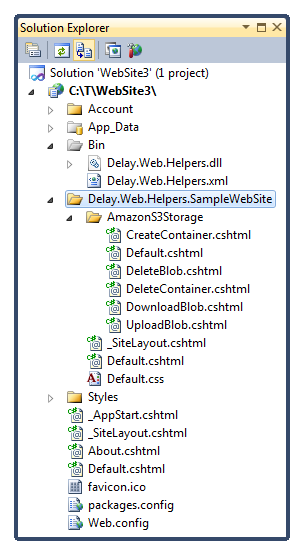

By far the biggest change with this release is the replacement of the SysLink control with one I wrote called

IdealSizeStatic. Recall from the previous post that there were two things I liked about SysLink: its ability to return an "ideal size" and its ability to render hyperlinks. Both of these features worked like I wanted, but the lack of support for LM_GETIDEALSIZE on XP was a deal-breaker. When creatingIdealSizeStatic, I kept things as simple as possible while also being consistent with howSysLinkoperates (ex: the use of WM_CTLCOLORSTATIC and WM_SETFONT) just in case I decide to switch back some day.IdealSizeStaticends up being a pretty general-purpose control that offersWM_GETIDEALSIZEfor querying the bounds (width and height) of its text - which is handy for the WPF-like layout pass I implemented in Insomnia.Despite my goal of keeping

IdealSizeStaticas simple as possible, I didn't want to give up on the hyperlink functionality Insomnia already used, so I did the work to support that scenario. Specifically, if the first character of its window title is '_',IdealSizeStaticassumes it's showing a link, strips off the '_' prefix, and renders the control text in blue. Assuming WS_TABSTOP has also been set, it will show a focus rectangle when relevant and respond to mouse left-button clicks and space bar presses by calling ShellExecuteEx to open the link in the user's preferred web browser.IdealSizeStaticwon't win any awards for "Win32 control of the year", but it seems to do just enough to be an adequate replacement forSysLink.:) -

Having purged the

SysLinkcontrol, Insomnia no longer has a dependency on the COMCTL32 common control DLL and the corresponding code to initialize it and incorporate the necessary manifest has been removed. -

The other problem with running on XP was the use of the LoadIconMetric API to retrieve the application icon for the notification area. Fortunately, it's pretty simple to fall back to the LoadImage API instead - though it doesn't offer the same "auto-scaling" magic the original function does.

-

Speaking of icons, one curious problem with XP was that Windows Explorer showed the default application icon instead of the custom one embedded in the Insomnia executable. This ends up being because the custom ICO file generator I wrote/use outputs icon images in the 32-bit alpha-blended PNG format and that format isn't supported on Windows XP. This time around, I've also embedded 16x16 and 32x32 icons with the older 24-bit image+mask format. Because this type is recognized by XP, the icon shows up correctly.

Aside: Unfortunately, XP still has a bit of trouble rendering the bottom-most pixels of the 32x32 icon in the application's property page. But that property page seems to have a variety of troubles with icons - there was a similar issue with the previous version even under Windows 7!

-

I've also fixed a couple of minor issues I noticed along the way - none of which should matter much in practice. The new version of the 32-bit and 64-bit builds of Insomnia is 2011-03-19. The version number of the .NET build has not changed and remains 2010-01-29.
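To make the icon-format note above a little more concrete, here is a minimal sketch (not code from Insomnia) of how one might check which images inside an ICO file are stored as PNG and which use the older bitmap format. It relies only on the publicly documented ICO layout: a 6-byte header followed by 16-byte directory entries, with PNG images identified by the standard 8-byte PNG signature. The names `IcoEntryInfo`, `DescribeIco`, and `HasNonPngImage` are hypothetical and exist purely for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical summary of one image stored inside an ICO file.
struct IcoEntryInfo {
    int width;     // A stored value of 0 means 256
    int height;    // A stored value of 0 means 256
    int bitCount;  // Bits per pixel as declared in the directory entry
    bool isPng;    // True when the image data begins with the PNG signature
};

// Little-endian helpers (ICO files are always little-endian).
static uint16_t ReadU16(const std::vector<uint8_t>& d, size_t o) {
    assert(o + 2 <= d.size());
    return static_cast<uint16_t>(d[o] | (d[o + 1] << 8));
}
static uint32_t ReadU32(const std::vector<uint8_t>& d, size_t o) {
    assert(o + 4 <= d.size());
    return static_cast<uint32_t>(d[o]) |
           (static_cast<uint32_t>(d[o + 1]) << 8) |
           (static_cast<uint32_t>(d[o + 2]) << 16) |
           (static_cast<uint32_t>(d[o + 3]) << 24);
}

// Walks the ICO directory and reports the format of each image.
// Returns an empty list if the header is not a valid ICO header.
std::vector<IcoEntryInfo> DescribeIco(const std::vector<uint8_t>& data) {
    static const uint8_t kPngSignature[8] =
        {0x89, 0x50, 0x4E, 0x47, 0x0D, 0x0A, 0x1A, 0x0A};
    std::vector<IcoEntryInfo> result;
    // Header: reserved (0), type (1 = icon), image count.
    if (data.size() < 6 || ReadU16(data, 0) != 0 || ReadU16(data, 2) != 1) {
        return result;
    }
    const size_t count = ReadU16(data, 4);
    for (size_t i = 0; i < count; ++i) {
        const size_t entry = 6 + i * 16;
        if (entry + 16 > data.size()) break;  // Truncated directory
        IcoEntryInfo info;
        info.width = data[entry] ? data[entry] : 256;
        info.height = data[entry + 1] ? data[entry + 1] : 256;
        info.bitCount = ReadU16(data, entry + 6);
        const uint32_t size = ReadU32(data, entry + 8);
        const uint32_t offset = ReadU32(data, entry + 12);
        info.isPng = size >= 8 &&
                     static_cast<uint64_t>(offset) + 8 <= data.size() &&
                     std::memcmp(&data[offset], kPngSignature, 8) == 0;
        result.push_back(info);
    }
    return result;
}

// True if at least one image uses the older (non-PNG) bitmap format.
bool HasNonPngImage(const std::vector<uint8_t>& data) {
    for (const IcoEntryInfo& e : DescribeIco(data)) {
        if (!e.isPng) return true;
    }
    return false;
}
```

Feeding the bytes of an ICO file to `DescribeIco` lists each embedded image with its declared size, bit depth, and whether it is PNG-encoded. A file for which `HasNonPngImage` returns false contains only PNG images, which is the situation described above as a problem on XP.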

I initially put off supporting XP because I figured very few people still used it. However, the steady stream of feedback from XP users finally convinced me there's enough interest to make the effort worthwhile. Windows 7 and Windows Vista users won't see functional differences with this release, but they will benefit from the slightly reduced footprint that comes from breaking the ties to COMCTL32.dll. My IdealSizeStatic control isn't a perfect replacement for the SysLink control, but it gets close enough - and does so without being overly complex.
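For readers curious about the '_' link convention used by IdealSizeStatic, the following is a rough, portable sketch of just the decision logic as described in the notes above. It is not the control's actual source: the names `StaticTextInfo`, `ParseWindowTitle`, `InputEvent`, and `ShouldOpenLink` are hypothetical, the WS_TABSTOP and focus-rectangle handling is not modeled, and the real control would go on to call ShellExecuteEx where this sketch simply returns true.

```cpp
#include <string>

// Hypothetical result of interpreting a control's window title.
struct StaticTextInfo {
    bool isLink;        // True when the title started with '_'
    std::wstring text;  // Text to display (and, for links, to open)
};

// Applies the convention from the post: a leading '_' marks the text
// as a link, and the prefix is stripped before display.
StaticTextInfo ParseWindowTitle(const std::wstring& title) {
    StaticTextInfo info;
    info.isLink = !title.empty() && title[0] == L'_';
    info.text = info.isLink ? title.substr(1) : title;
    return info;
}

// Hypothetical stand-ins for the inputs the post mentions.
enum class InputEvent { LeftClick, SpaceKey, Other };

// A link reacts to left-button clicks and space bar presses;
// plain text never reacts.
bool ShouldOpenLink(const StaticTextInfo& info, InputEvent input) {
    return info.isLink &&
           (input == InputEvent::LeftClick || input == InputEvent::SpaceKey);
}
```

With this sketch, a title such as `L"_http://example.com/"` is treated as a link whose text is the URL without the underscore, while a title without the prefix is displayed as ordinary static text and ignores clicks.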

Windows XP users: I hope you like the new support. Thanks for your patience!

All you need to install to get going is the
