"If I have seen further, it is by standing on the shoulders of giants" [An alternate implementation of HTTP gzip decompression for Windows Phone]

The HTTP protocol supports data compression of network traffic via the Content-Encoding header. Compressing network traffic is beneficial because it reduces the amount of data that needs to be transmitted over the network - and sending fewer bytes obviously takes less time! The tradeoff is that it takes a bit of extra work to decompress the data, but because the bottleneck is nearly always the network, HTTP compression should be a win pretty much every time. And when you're dealing with comparatively slow, unreliable networks like the ones used by cell phones, the advantages of compression are even more significant.

Aside: Because most networks are lossy and transfer data in packets, sending just one fewer byte can be meaningful if it reduces the number of packets.
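To make the packet arithmetic concrete, here is a minimal sketch. The `PacketMath` class is hypothetical, and the 1460-byte payload per packet is only an assumption (a common TCP segment size on Ethernet); real networks vary.

```csharp
using System;

// Hypothetical helper for illustration only; not part of the code from this post.
public static class PacketMath
{
    // Assumption: each packet carries up to 1460 bytes of payload.
    public static int PacketsNeeded(int payloadBytes, int bytesPerPacket = 1460)
    {
        // Integer ceiling division: any partial packet still counts as a packet.
        return (payloadBytes + bytesPerPacket - 1) / bytesPerPacket;
    }
}
```

Under that assumption, a 1461-byte response needs two packets while a 1460-byte response fits in one, so trimming a single byte halves the packet count in this (admittedly contrived) case.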

You might reasonably expect that enabling compression for Windows Phone web requests is as simple as setting the HttpWebRequest.AutomaticDecompression property available since .NET 2.0. Unfortunately, this property is not supported by current versions of the Windows Phone platform, so it's up to application developers to add HTTP compression support themselves. Consequently, a number of home-grown solutions have cropped up.

One popular example is Morten Nielsen's GZipWebClient. Naturally, Morten didn't want to implement his own compression library, so he's using SharpZipLib to do the heavy lifting. This is a great example of reuse, but it's important to note that SharpZipLib is licensed under the GNU General Public License (GPL) and some developers won't be comfortable with the implications of using GPL code in their own project. (For more on what those implications are, the Wikipedia article for GPL has a fairly detailed overview which includes mention of ambiguities around the definition of "derivative works".)

Aside: Of course, there are other options. Another popular library is DotNetZip which claims to be under the MS-PL (Microsoft Public License), the same permissive "do pretty much whatever you want with the code" license I use for this blog. However, a brief look at the license files it comes with suggests maybe that's not the whole story because there are at least four other licenses called out there.

As you can probably tell, I'm not the biggest fan of lawyers, ambiguity, or unnecessary risk [

The basic idea is to create a bit of code that customizes the user's WebClient/HttpWebRequest to add the Accept-Encoding header. Once that's in place, servers that support gzip automatically compress their response bodies. To decompress the downloaded response on the phone, another bit of code is used to wrap the compressed response stream in a ZIP archive and hand it off to Application.GetResourceStream which does the heavy lifting and provides access to the decompressed response stream. What's nice about this technique is that the decompression implementation is part of the Silverlight framework - meaning applications don't need to pull in a bunch of external code or increase their size!
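The request side of that idea can be sketched roughly as follows. The class and method names here (`GzipRequestSketch`, `AcceptGzip`, `IsGzipEncoded`) are hypothetical and are not the names used in the download for this post; the sketch only assumes the standard `HttpWebRequest.Headers` collection and the `HttpRequestHeader.AcceptEncoding` enum value.

```csharp
using System;
using System.Net;

// Hypothetical helpers for illustration; not the implementation from this post.
public static class GzipRequestSketch
{
    // Advertise gzip support so a capable server may compress its response body.
    public static HttpWebRequest AcceptGzip(this HttpWebRequest request)
    {
        request.Headers[HttpRequestHeader.AcceptEncoding] = "gzip";
        return request;
    }

    // Returns true when a Content-Encoding header value says the body is gzip.
    // A server is free to ignore Accept-Encoding, so the response must be checked.
    public static bool IsGzipEncoded(string contentEncoding)
    {
        return contentEncoding != null &&
            contentEncoding.Trim().Equals("gzip", StringComparison.OrdinalIgnoreCase);
    }
}
```

The check on the response matters because sending Accept-Encoding is only a request: if the server replies without a gzip Content-Encoding, the response stream should be used as-is.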

I wanted this to be easy, so I've created a WebClient subclass and all you have to do is change this:

client = new WebClient();

Into this:

client = new GzipWebClient();

After which your application's HTTP requests will automatically benefit from gzip compression!

Of course, some people like to work a little closer to the metal and I've done a similar thing for the HttpWebRequest/HttpWebResponse crowd. Change this:

request = WebRequest.CreateHttp(_uri);
request.BeginGetResponse(callback, request);
// ...
response = (HttpWebResponse)request.EndGetResponse(result);
stream = response.GetResponseStream();
// ...

Into this:

request = WebRequest.CreateHttp(_uri);
request.BeginGetCompressedResponse(callback, request);
// ...
response = (HttpWebResponse)request.EndGetResponse(result);
stream = response.GetCompressedResponseStream();
// ...

And you're set!

Great, that's all well and good, but does any of this really matter? Less is clearly more, but is there actually a noticeable difference when using gzip? I wanted to answer that question for myself, so the sample application for this post not only exercises the code I've written, it also acts as a simple, real-time performance report! The sample makes continuous requests for http://microsoft.com/ using WebClient, standard HttpWebRequest/HttpWebResponse, my custom GzipWebClient, my Compressed helpers for HttpWebRequest/HttpWebResponse, and (optionally) Morten's GZipWebClient (for comparison purposes). As the data is collected, it's charted via the Silverlight Toolkit's Data Visualization library (which I've previously shown running on Windows Phone).

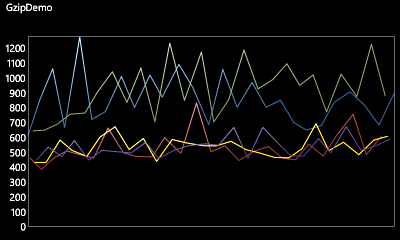

Here's what it looks like running in the emulator using a wired connection:

In the chart above, you can clearly see which requests are using gzip and which aren't! Not only are the gzip-enabled requests noticeably faster on average, they're nearly always faster even in the worst case. What's more, while there's variability for both kinds of requests (that's part of how the internet works), the delta between best/worst times of gzip-compressed requests is smaller (i.e., they're more consistent).

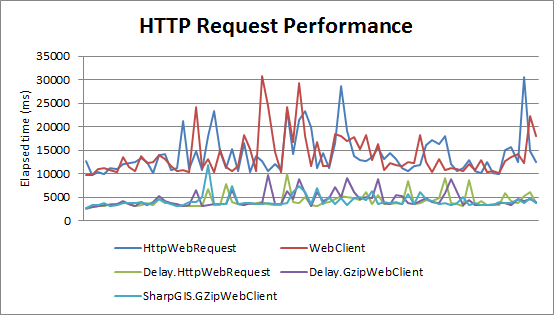

That's pretty compelling data, but the real benefit comes when the phone's data connection is used. Here's the output from the same app using the cell network (via Excel this time):

All our previous observations remain true - and are even more pronounced in this scenario. Gzip-compressed HTTP requests are significantly faster (taking less than half the time) and more predictable than traditional requests for the same data.

Awesome!

Aside: Based on the chart above, it seems reasonable to claim all three gzip solutions are equivalent. That said, if you squint just right, it looks like using HttpWebRequest is - on average - marginally quicker than using WebClient (as you'd expect; WebClient calls HttpWebRequest under the covers). Additionally, SharpGIS.GZipWebClient appears to be - on average - very slightly quicker than Delay.GzipWebClient (which is also not surprising when you consider the hoops my code jumps through to avoid the external dependency). Bear in mind, though, that these differences only really show up at the millisecond level, and seem unlikely to be significant for most real-world scenarios.

Notes:

- Using the code I've authored is quite easy:

  - If you want to use GzipWebClient, simply add the GzipWebClient.cs and GzipExtensions.cs files to your project, reference the Delay namespace, and replace any instances of WebClient.

  - If you're a HttpWebRequest/HttpWebResponse fan, you only need to add GzipExtensions.cs to your project, reference the Delay namespace, and invoke the extension methods HttpWebRequest.BeginGetCompressedResponse and HttpWebResponse.GetCompressedResponseStream (as shown above).

- By default, the sample application does not include Morten's GZipWebClient because I don't want to get into the business of distributing someone else's code. However, it's easy to include - the following comment in MainPage.xaml.cs tells you how:

  // For an additional scenario, un-comment the following line and install the "SharpGIS.GZipWebClient" NuGet package
  //#define SHARPGIS

  The code to use that assembly is already in the sample application; you just need to provide the bits if you want to try it out. :)

- The implementation of the gzip-to-ZIP wrapper is fairly straightforward: it reads the gzip-compressed response from the server, wraps it in a stream that represents a valid ZIP archive with a single file compressed using the deflate algorithm which both specifications share, and hands that off to the Silverlight framework to return a decompressed stream for that file. To be clear, the compressed data isn't altered - it's simply re-packaged into a format that's more useful. :) If you're interested in the specifics, you'll probably want to familiarize yourself with the gzip specification and the ZIP file specification and then have a look at the code.

- There are two minor inefficiencies in my code, one of which seems unavoidable and the other of which could be dealt with.

  - The unavoidable one - and the reason I think SharpZipLib might be a smidge quicker - is that the size/checksum data for the gzip data is provided at the end of the download stream, but is needed at the beginning of the wrapper stream. If the correct values aren't used, the ZIP wrapper will be rejected - but those values are not known until the entire response has been downloaded. Therefore, it's not possible for my implementation to proactively process the data while it is being downloaded; the download must be buffered instead. A decompression library won't suffer from this limitation and ought to be quicker as a result.

  - The avoidable inefficiency is that a single copy of the input data is made when creating the ZIP wrapper stream. To be clear, this is the only time data is copied (I've structured the code so there isn't any buffer resizing, etc.), but it's not technically necessary because the ZIP wrapper stream could operate directly from the download buffers. I may make this improvement in the future, but expect the performance difference to be negligible.
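For readers who want a feel for the gzip-to-ZIP repackaging described in the notes, here is a minimal sketch under stated assumptions. It is not the code from the download: `GzipToZipSketch` and its members are hypothetical names, it copies the payload into a new byte array rather than streaming, and it writes a full central directory so that ordinary ZIP readers accept the result (the Silverlight framework may well need less). It also assumes an ASCII entry name.

```csharp
using System;
using System.IO;
using System.Text;

// Hypothetical helper (not the code from this post): repackages a fully
// buffered gzip stream as a single-entry ZIP archive, reusing the deflate data.
public static class GzipToZipSketch
{
    public static byte[] Wrap(byte[] gzip, string entryName)
    {
        if (gzip.Length < 18 || gzip[0] != 0x1f || gzip[1] != 0x8b || gzip[2] != 8)
            throw new InvalidDataException("Not a deflate-based gzip stream.");

        // Skip the fixed 10-byte gzip header and any optional fields.
        int flags = gzip[3];
        int pos = 10;
        if ((flags & 0x04) != 0) pos += 2 + (gzip[pos] | (gzip[pos + 1] << 8)); // FEXTRA
        if ((flags & 0x08) != 0) { while (gzip[pos++] != 0) { } }               // FNAME
        if ((flags & 0x10) != 0) { while (gzip[pos++] != 0) { } }               // FCOMMENT
        if ((flags & 0x02) != 0) pos += 2;                                      // FHCRC

        // The gzip trailer carries the CRC-32 and uncompressed size that the
        // ZIP headers need, which is why the whole response must arrive first.
        int dataLength = gzip.Length - 8 - pos;
        if (dataLength < 0) throw new InvalidDataException("Truncated gzip stream.");
        uint crc = ReadUInt32(gzip, gzip.Length - 8);
        uint size = ReadUInt32(gzip, gzip.Length - 4);

        byte[] name = Encoding.UTF8.GetBytes(entryName); // ASCII names assumed
        var output = new MemoryStream();
        var writer = new BinaryWriter(output);           // always little-endian

        writer.Write(0x04034b50u);                       // local file header signature
        WriteSharedFields(writer, crc, (uint)dataLength, size, (ushort)name.Length);
        writer.Write(name);
        writer.Write(gzip, pos, dataLength);             // deflate payload, unaltered

        uint directoryOffset = (uint)output.Position;
        writer.Write(0x02014b50u);                       // central directory signature
        writer.Write((ushort)20);                        // version made by
        WriteSharedFields(writer, crc, (uint)dataLength, size, (ushort)name.Length);
        writer.Write((ushort)0);                         // file comment length
        writer.Write((ushort)0);                         // disk number start
        writer.Write((ushort)0);                         // internal attributes
        writer.Write(0u);                                // external attributes
        writer.Write(0u);                                // offset of local header
        writer.Write(name);

        uint directorySize = (uint)output.Position - directoryOffset;
        writer.Write(0x06054b50u);                       // end of central directory
        writer.Write((ushort)0);                         // this disk number
        writer.Write((ushort)0);                         // disk holding the directory
        writer.Write((ushort)1);                         // entries on this disk
        writer.Write((ushort)1);                         // total entries
        writer.Write(directorySize);
        writer.Write(directoryOffset);
        writer.Write((ushort)0);                         // archive comment length
        writer.Flush();
        return output.ToArray();
    }

    // The 26 bytes that the local and central headers have in common.
    private static void WriteSharedFields(BinaryWriter w, uint crc, uint compressed,
        uint uncompressed, ushort nameLength)
    {
        w.Write((ushort)20);   // version needed to extract (2.0 covers deflate)
        w.Write((ushort)0);    // general purpose flags
        w.Write((ushort)8);    // compression method: deflate
        w.Write((ushort)0);    // last-modified time (midnight)
        w.Write((ushort)0x21); // last-modified date (1980-01-01)
        w.Write(crc);
        w.Write(compressed);
        w.Write(uncompressed);
        w.Write(nameLength);
        w.Write((ushort)0);    // extra field length
    }

    private static uint ReadUInt32(byte[] b, int i)
    {
        return (uint)(b[i] | (b[i + 1] << 8) | (b[i + 2] << 16) | (b[i + 3] << 24));
    }
}
```

On the phone, the returned bytes could presumably be wrapped in a MemoryStream and passed to Application.GetResourceStream along with a relative Uri naming the entry; that step is not exercised by this sketch. Note how the CRC-32 and size are read from the last eight bytes of the input but written near the start of the output, which is the buffering constraint described in the notes.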

Compressing HTTP traffic is a pretty clear win for desktop applications - and an even bigger benefit for mobile apps communicating over slower, less reliable cellular networks. The Windows Phone platform doesn't make using gzip easy, but it's possible if you're sufficiently motivated. For people who aren't intimidated by licenses, there are already some good options for gzip - but for those who aren't comfortable with what's out there, I'm offering a new option under one of the most permissive licenses around.

I hope you find it useful!