Back to backup [Revisiting and refreshing my approach to backups]

A topic that comes up from time to time is how people deal with backing up their data. It's one of those times again, so I'm sharing my technique for the benefit of anyone who's interested. My previous post on backup strategy was written over 11 years ago (!), and it's surprising how much is still relevant. This post expands on the original and includes my latest practices. Of course, we all have different priorities, restrictions, and aversion to data loss, so what I describe here doesn't apply universally. Just take whatever's relevant and ignore the rest. :)

Considerations

Dependability - Hardware eventually fails. Things with moving parts tend to die sooner, but even solid-state drives have problems in the long run. Sometimes, the risk of failure is compounded because a storage device is so tightly integrated with the computer that it can become unusable due to failure of an unrelated component. And even if hardware doesn't break on its own, events like a lightning strike can take things out unexpectedly.

Accidental deletion or overwrite - As careful as one might be, every now and then it's possible to accidentally delete an important file. Or maybe just open it, make some inadvertent edits, and absentmindedly save them. This is a scenario many backup strategies don't account for; if an unwanted change to the original data is immediately replicated to a backup, it can be difficult to recover from the mistake.

Undetected corruption - A random hardware or software failure (or power loss) can invisibly result in a garbled file, directory, or disk. The challenge is that corruption can go undetected for a long time until something happens to need the relevant bits on disk. It's easy to propagate corruption to a backup copy when you don't realize it's present.

Local disaster - Fire and tornadoes are rare, but can destroy everything in the house. Even with a perfect backup on a spare drive, if that drive was in the same place at the time of the disaster, the original and backup are both lost. Odds of avoiding the problem are improved slightly by keeping drives in different rooms, but a sufficiently serious calamity will not be deterred.

Large-scale disaster - The likelihood is quite low, but if a flood (as a timely example) comes through the area, it won't matter how many backups are stored around the house because all of them will be underwater. The only protection is to store things offsite, preferably far away.

Security/privacy - Most people won't be the target of industrial espionage, but a nosy house guest can be just as problematic - especially so because they have prolonged access to your data. If thieves steal a computer, they can read its drives. Bank records, tax documents, source code, etc., can all be compromised if precautions haven't been taken to encrypt the original and all backups.

Bandwidth - Many people use services that store data in the cloud. With a fast-enough connection to the Internet, this works well. But typical Internet plans have limited upstream bandwidth, meaning it can take hours to upload a single video. Things catch up over the course of a few days, but the latest data can be lost if service is abruptly cut off.

Validation - In order to be sure backups are successful, it's necessary to test them - regularly. Otherwise, you might not be as well prepared as you thought. Some backup approaches are easy to verify, others not so much.

Ease of recovery - In the event of a complete restore from backup, things have probably gone very wrong and any additional hardship is of little concern. That said, having immediate access to all data is a nice perk.

Cross-platform support - Whether you prefer Windows, Mac, or Linux, you'll probably stay with your OS of choice after catastrophe strikes. But it's nice to have a backup strategy where data can be recovered from a different platform. If your threat model includes a global virus that wipes out a particular operating system, cross-platform support is important.

Implementation

All drives storing data I care about are encrypted with BitLocker. This mitigates the risk of theft/tampering and means I can leave backup drives anywhere. Each backup drive is a 1 or 2 TB 2.5" external USB drive. These devices are nice because they don't need a separate power supply and they're inherently portable and resilient - especially when stored inside a waterproof Ziploc bag.

At the end of each day, I mirror the latest changes from the primary drive in my computer to a backup drive sitting next to it. This step protects against hardware failure (high risk), and it's the drive I'll take if I need to leave home in a hurry.
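Any mirroring tool will do for this step. As a rough illustration of what a one-way mirror involves, here's a minimal Python sketch. The `mirror` function and its parameters are hypothetical names chosen for this example, not the interface of any particular tool, and the sketch assumes ordinary files and directories (no special handling for symlinks, permissions, or locked files).

```python
import filecmp
import shutil
from pathlib import Path


def mirror(source: Path, backup: Path) -> None:
    """One-way mirror: make the contents of `backup` match `source`."""
    backup.mkdir(parents=True, exist_ok=True)

    # Remove anything on the backup that no longer exists on the source
    # (or that changed type, e.g. a file that became a directory).
    for item in list(backup.iterdir()):
        counterpart = source / item.name
        if item.is_dir() and not item.is_symlink():
            if not counterpart.is_dir():
                shutil.rmtree(item)
        elif not counterpart.is_file():
            item.unlink()

    # Copy new or changed files, recursing into subdirectories.
    for item in source.iterdir():
        target = backup / item.name
        if item.is_dir():
            mirror(item, target)
        elif not target.exists() or not filecmp.cmp(item, target, shallow=True):
            # copy2 preserves timestamps, so unchanged files are
            # skipped by the shallow comparison on the next run.
            shutil.copy2(item, target)
```

Note that a true mirror propagates deletions and overwrites along with everything else, which is exactly the accidental deletion risk described under Considerations. That's why a mirror alone isn't enough and older copies are worth keeping.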

Every couple of months, I calculate a checksum for every file and save the results to the drive. Then I mirror to the backup, calculate checksums for everything on the backup, and make sure all the checksums match. This makes sure every bit on both drives is correctly written and readable. Files that haven't changed since the previous run also help detect bit rot, because their checksums should be identical to the ones saved earlier. Once verified, I swap the backup drive with another just like it at a nearby location (ex: friend's house, safe deposit box, etc.). This protects against theft or fire (lower risk).
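The checksum comparison can be sketched in a few lines of Python. This is a minimal illustration under some assumptions: SHA-256 is used as the hash (any reliable checksum would work), and `file_sha256`, `checksum_tree`, and `compare_manifests` are hypothetical helper names invented for this example rather than part of any existing tool.

```python
import hashlib
from pathlib import Path


def file_sha256(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large files don't need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def checksum_tree(root: Path) -> dict[str, str]:
    """Map each file's path (relative to root) to its checksum."""
    return {
        path.relative_to(root).as_posix(): file_sha256(path)
        for path in sorted(root.rglob("*"))
        if path.is_file()
    }


def compare_manifests(
    primary: dict[str, str], backup: dict[str, str]
) -> dict[str, list[str]]:
    """Report files that are missing, unexpected, or different on the backup."""
    shared = primary.keys() & backup.keys()
    return {
        "missing_from_backup": sorted(primary.keys() - backup.keys()),
        "extra_on_backup": sorted(backup.keys() - primary.keys()),
        "mismatched": sorted(k for k in shared if primary[k] != backup[k]),
    }
```

Running `compare_manifests(checksum_tree(primary_root), checksum_tree(backup_root))` should produce three empty lists when the drives agree. The same comparison against a manifest saved during an earlier run would flag files whose contents changed even though nobody edited them, which is the bit rot case.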

Once a year, I swap the latest backup drive with another drive in a different geographic region (ex: relative's house). This protects against large-scale disaster like a flood (much lower risk), so the fact that it can be up to a year out of date is acceptable.

Because I've been doing this for a while, I also have a couple of spare drives that don't get updated regularly. These provide access to even older backups and can be used to recover outdated versions of a file in the event of persistent, undetected corruption or significant operator error.

Conclusion

This system addresses the above considerations in a way that works well for me. Extra redundancy provides peace of mind and requires very little effort most of the time. Cost is low and every step is under my control, so I don't need to worry about recurring fees or vendors going out of business. Recovery is simple and my family knows how to access backups if I'm not available. Remote backup drives consume no power, can live in the back of a drawer, and can be lost or destroyed without concern.

Nota bene

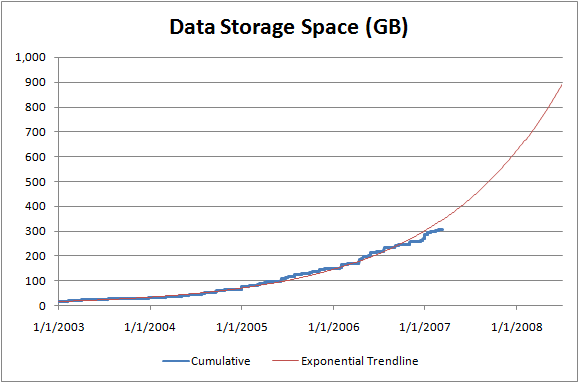

When I did the math on storage requirements a decade ago, I was concerned about capacity needs outpacing storage advances. But that didn't happen. In practice, the price of a drive with room for everything has stayed around (or below) $100. I buy a new drive every couple of years, meaning the amortized cost is minimal.