Out of hibernation [A new home and a bunch of updates for TextAnalysisTool.NET]

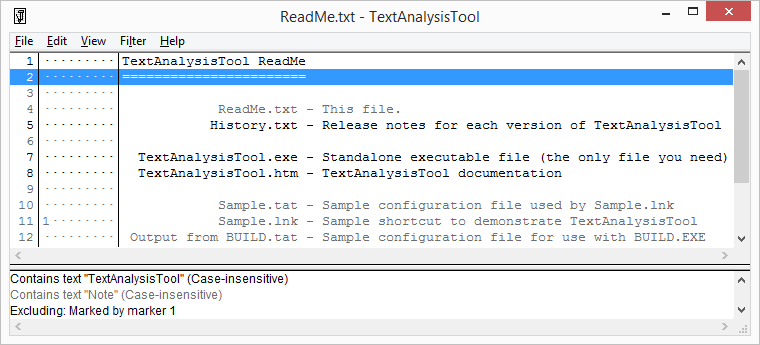

TextAnalysisTool.NET is one of the first side projects I did at Microsoft, and one of the most popular. Many people inside and outside the company have written me with questions, feature requests, or sometimes just to say "thank you". It's always great to hear from users, and they've provided a long list of suggestions and ideas for ways to make TextAnalysisTool.NET better.

Because I've changed teams and roles several times over the years, I don't find myself using TextAnalysisTool.NET as much as I once did. My time and interests are spread more thinly, and I haven't been updating the tool as aggressively. (Understatement of the year?)

Various coworkers have asked for access to the code, but nothing much came of that - until recently, when a small group showed up with the interest, expertise, and motivation to drive TextAnalysisTool.NET forward! They inspired me to simplify the contribution process and they have been making a steady stream of enhancements for a while now. It's time to take things to the next level, and today marks the first public update to TextAnalysisTool.NET in a long time!

The new source for all things TextAnalysisTool is the TextAnalysisTool.NET home page.

That's where you'll find an overview, download link, release notes, and other resources. The page is owned by the new TextAnalysisTool GitHub organization, so all of us are able to make changes and publish new releases. There's also an issue tracker, so users can report bugs, comment on issues, update TODOs, make suggestions, etc.

The new 2015-01-07 release can be downloaded from there, and includes the following changes since the 2013-05-07 release:

2015-01-07 by Uriel Cohen (http://github.com/cohen-uriel)

----------

* Added a tooltip to the loaded file indicator in the status bar

* Fixed a bug where setting a marker used in an active filter causes the current selection of lines to be changed

2015-01-07 by David Anson (http://dlaa.me/)

----------

* Improve HTML representation of clipboard text when copying for more consistent paste behavior

2015-01-01 by Uriel Cohen (http://github.com/cohen-uriel)

----------

* Fixed a bug where TAB characters are omitted in the display

* Fixed a bug where lines saved to file include an extra white space at the start

2014-12-21 by Uriel Cohen (http://github.com/cohen-uriel)

----------

* Changed compilation to target .NET Framework 4.0

2014-12-11 by Uriel Cohen (http://github.com/cohen-uriel)

----------

* Redesigned the status bar indications to be consistent with Visual Studio and added the number of currently selected lines

2014-12-04 by Uriel Cohen (http://github.com/cohen-uriel)

----------

* Added the ability to append an existing filters file to the current filters list

2014-12-01 by Uriel Cohen (http://github.com/cohen-uriel)

----------

* Added recent file/filter menus for easy access to commonly-used files

* Added a new settings registry key to set the maximum number of recent files or filter files allowed in the corresponding file menus

* Fixed a bug where pressing SPACE with no matching lines from filters crashed the application

* Fixed a bug where copy-pasting lines from the application to Lync resulted in one long line without carriage returns

2014-11-11 by Uriel Cohen (http://github.com/cohen-uriel)

----------

* Added support for selection of background color in the filters (different selection of colors than the foreground colors)

* The background color can be saved and loaded with the filters

* Filters from previous versions that lack a background color will have the default background color

* The foreground color of filters is now saved to the 'foreColor' attribute. The old 'color' attribute is still loaded for backward compatibility.

* Changed control alignment in Find dialog and Filter dialog

2014-10-21 by Mike Morante (http://github.com/mike-mo)

----------

* Fix localization issue with the build string generation

2014-04-22 by Mike Morante (http://github.com/mike-mo)

----------

* Line metadata is now visually separate from line text contents

* Markers can be shown always/never/when in use to have more room for line text, and the chosen setting persists across sessions

* Added a status bar panel with a funnel icon to reflect the current status of the Show Only Filtered Lines setting

2014-02-27 by Mike Morante (http://github.com/mike-mo)

----------

* Added zoom controls to quickly increase/decrease the font size

* Zoom level persists across sessions

* Added status bar panel to show current zoom level

These improvements were all possible thanks to the time and dedication of the new contributors (and organization members):

- Mike Morante

- Uriel Cohen

- With more to come...

Please join me in thanking these generous souls for taking time out of their busy schedules to contribute to TextAnalysisTool.NET! They've been a pleasure to work with, and a great source of ideas and suggestions. I've been really pleased with their changes and hope you find the new TextAnalysisTool.NET more useful than ever!