sudo localize & make me-a-sandwich [Free PseudoLocalizer class makes it easy for anyone to identify potential localization issues in .NET applications]

I've previously written about the benefits of localization and shown how to localize Windows Phone 7 applications. The techniques I describe in that post constitute a good workflow that's just as suitable for WPF and Silverlight desktop applications! But even with good processes in place, the way localization is done in the real world has some challenges...

You see, localization can be expensive: hiring people to translate an entire application, re-testing it in the newly supported locale, fixing resulting bugs, etc. So teams often wait till near the end of the release cycle - after the UI has stabilized - to start localizing the application. This is a perfectly reasonable approach, but there are invariably a few surprises - usually some variation of "What do you mean that string is hard-coded in English and can't be translated??". It sure would be nice if teams could do some kind of low-cost localization in order to identify - and fix - oversights like this long before they turn into problems...

Yep - that process is known as pseudo-localization. What pseudo-localization does is create alternate versions of resource strings by using different characters that look similar enough to the original English characters that text is still readable - but obviously "localized". (This is one of those "a picture is worth a thousand words" moments, so please check out the Wikipedia article or bear with me for just a moment...) Additionally, because some languages tend to have longer phrases than English (German, I'm looking at you!), there's often an additional aspect of string lengthening to simulate that and help detect wrapping, clipping, and the like.
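
To make the idea concrete, here is a minimal sketch of the two transformations just described: look-alike character substitution and string lengthening. The `PseudoSketch` class, its character table, the bracket markers, and the roughly 30% padding are all assumptions made for illustration; they are not taken from the PseudoLocalizer class in the download.

```csharp
using System;
using System.Collections.Generic;
using System.Text;

// Hypothetical helper for illustration; not the PseudoLocalizer class from the download.
static class PseudoSketch
{
    // A small, hand-picked table of look-alike characters (an assumption of this sketch).
    private static readonly Dictionary<char, char> LookAlikes = new Dictionary<char, char>
    {
        { 'a', 'á' }, { 'e', 'é' }, { 'i', 'í' }, { 'o', 'ö' }, { 'u', 'ü' },
        { 'A', 'Å' }, { 'E', 'É' }, { 'O', 'Ö' }, { 'U', 'Ü' }, { 'n', 'ñ' }, { 'c', 'ç' },
    };

    public static string Transform(string text)
    {
        // Brackets make clipped strings easy to spot: a missing "]" means truncation.
        var builder = new StringBuilder("[");
        foreach (char c in text)
        {
            // Swap in the look-alike when one exists; otherwise keep the character.
            builder.Append(LookAlikes.TryGetValue(c, out char replacement) ? replacement : c);
        }

        // Pad by roughly 30% (at least one character) to simulate wordier languages.
        int padding = Math.Max(1, (text.Length * 3 + 9) / 10);
        builder.Append(' ').Append('-', padding).Append(']');
        return builder.ToString();
    }
}
```

With this table, `PseudoSketch.Transform("Hello")` returns `[Héllö --]`: still readable, obviously not the original, and a little longer. Characters that are not in the table (digits, braces in format placeholders like `{0}`, punctuation) pass through unchanged.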

Aside: It's important to remember that the character "translations" are chosen exclusively on the basis of how similar they look to the original character and not on the basis of linguistic correctness, cultural influence, or anything like that!

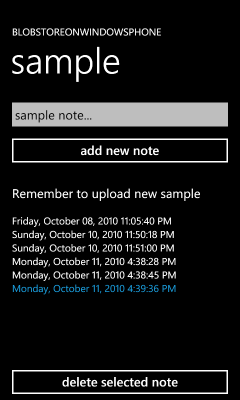

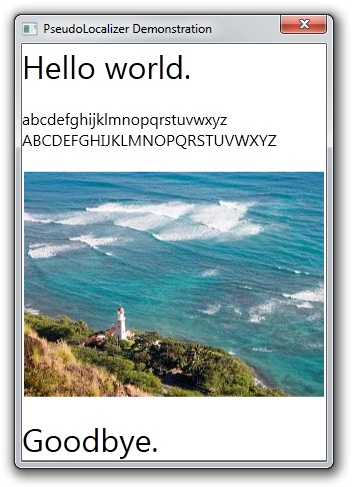

Here's the sample application I created for this post running in its normal English mode. (It's not very sophisticated, but it's good enough for our purposes.) Can you tell if all the strings are properly localizable? Are there any other localization concerns here?

Pseudo-localization isn't a new concept and there are a variety of tools out there that do a fine job of it. However, none of the ones I found during a quick search appeared to be free, simple to use, and unencumbered by restrictions (licensing, distribution, etc.). So I thought it would be fun to write my own and share it with the community as open source under the very permissive Ms-PL license.

The class I've created is called PseudoLocalizer and it automatically pseudo-localizes standard .NET RESX-based resources. Using RESX-based resources is the recommended approach for building localizable Silverlight and Windows Phone 7 applications, the recommended approach for building localizable Windows Forms applications, and also a perfectly fine approach for building localizable WPF applications.

Aside: The recommended technique for localizing WPF applications is actually something else, but I have some issues with that approach and won't be discussing it here.

I've said it's easy to use PseudoLocalizer, and all it takes is three special steps:

- Add the (self-contained) `PseudoLocalizer.cs` file from the sample download to your project.

- Add the following code somewhere it will be run once and run early (ex: the application's constructor):

  ```csharp
  #if PSEUDOLOCALIZER_ENABLED
      Delay.PseudoLocalizer.Enable(typeof(ProjectName.Properties.Resources));
  #endif
  ```

- Add `PSEUDOLOCALIZER_ENABLED` to the list of conditional compilation symbols for your project (via the Project menu, Properties item, Build tab in Visual Studio).

Done! Not only will all localizable strings be automatically pseudo-localized, but images will, too! How does one pseudo-localize an image?? Well, I considered a variety of techniques, but settled on simply inverting the colors to create a negative image. This has the nice benefit of keeping the image dimensions the same (which is sometimes important) as well as preserving any directional aspects it might have (i.e., an image of an arrow pointing left has the same meaning after being "localized").

Aside: I've never seen image pseudo-localization before, but it seems like the obvious next step. Although many images don't need to be localized (ex: a gradient background), some images have text in them or make assumptions that aren't true in all locales (ex: thumbs up means "good"). So it seems pretty handy to know which images aren't localizable! :)
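
For the curious, the color inversion described above can be sketched on a raw pixel buffer. This is only an illustration under stated assumptions: the `InvertSketch` class is hypothetical, the pixels are assumed to be packed as 32-bit ARGB values, and leaving the alpha channel alone is a choice made for this sketch rather than a documented behavior of PseudoLocalizer.

```csharp
using System;

// Hypothetical helper for illustration; works on a plain pixel array rather than a real bitmap.
static class InvertSketch
{
    // Returns a negative of the input: red, green, and blue are inverted, alpha is untouched.
    // The output has exactly as many pixels as the input, so image dimensions are preserved.
    public static uint[] Invert(uint[] argbPixels)
    {
        var result = new uint[argbPixels.Length];
        for (int i = 0; i < argbPixels.Length; i++)
        {
            // XOR with 0x00FFFFFF flips every color bit (equivalent to 255 - value
            // for each channel) while the top eight alpha bits stay as they were.
            result[i] = argbPixels[i] ^ 0x00FFFFFFu;
        }
        return result;
    }
}
```

Opaque black (`0xFF000000`) becomes opaque white (`0xFFFFFFFF`), and inverting twice gives back the original pixels, which makes the effect easy to sanity-check.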

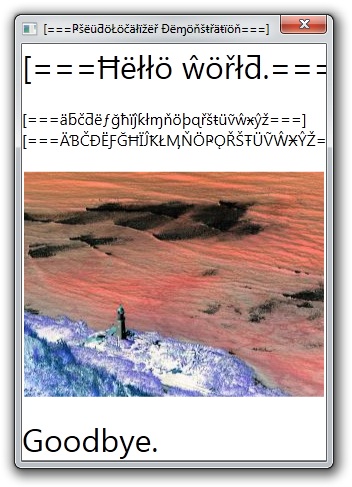

Okay, let's see how well you did on the localization quiz! Here's the sample application with PseudoLocalizer enabled:

Hum... While it's clear most of the text was correctly localizable (and therefore automatically pseudo-localized), it appears the lazy developer (uh, wait, that's me...) forgot to put one of the strings in the RESX file: "Goodbye" is hardcoded to English in the XAML.

Notes:

- What I've described in this post works only on WPF; Silverlight has a couple of limitations that prevent what I've done from working exactly as-is. My next blog post will discuss how to overcome those limitations and use `PseudoLocalizer` on Silverlight, too!

- The way `PseudoLocalizer` works so seamlessly is by examining the type of the generated resource class and using (private) reflection to swap in a custom ResourceManager class for the default one. This `PseudoLocalizerResourceManager` works the same as the default one for loading resources - then it post-processes strings and bitmaps to provide its pseudo-localization effects. Private reflection (i.e., examining and/or modifying the internal data of an object) is generally a bad practice - but I tend to believe it's acceptable here because the target class is simple, its (generated) code is part of the project, and `PseudoLocalizer` is only ever going to be used as a development tool (i.e., never in a released application).

- For readers who want a brief overview of how to use RESX-resources in WPF, here you go:

  - Set the Access Modifier to "Public" in the RESX designer so the auto-generated resource property accessors will be accessible to the WPF data-binding system.

  - Create an instance of the auto-generated RESX class (typically named "Resources") as a WPF-accessible resource:

    ```xml
    <Window.Resources>
        <properties:Resources x:Key="Resources" xmlns:properties="clr-namespace:PseudoLocalizerWPF.Properties"/>
    </Window.Resources>
    ```

  - Create a Binding that references the relevant properties of that resource wherever you want to use them:

    ```xml
    <TextBlock Text="{Binding Path=AlphabetLower, Source={StaticResource Resources}}"/>
    ```

- Because RESX files expose images as instances of System.Drawing.Image and WPF deals with System.Windows.Media.ImageSource, I wrote a simple IValueConverter to convert from the former to the latter. (And it's part of the source code download.) Using `BitmapToImageSourceConverter` follows the usual pattern for value converters:

  - Create an instance as a resource:

    ```xml
    <delay:BitmapToImageSourceConverter x:Key="BitmapToImageSourceConverter" xmlns:delay="clr-namespace:Delay"/>
    ```

  - Use that resource in the relevant `Binding`:

    ```xml
    <Image Source="{Binding Path=Image, Source={StaticResource Resources}, Converter={StaticResource BitmapToImageSourceConverter}}"/>
    ```
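
The ResourceManager swap mentioned in the notes above might look something like the following minimal sketch. It is not the code from the download. `FakeResources`, `DictionaryResourceManager`, `PostProcessingResourceManager`, and `SwapSketch` are hypothetical names, and the sketch assumes the manager lives in a private static field named `resourceMan` (the name the RESX designer typically generates). A dictionary-backed manager stands in for the default one so the example runs without a compiled resource file.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Reflection;
using System.Resources;

// Stand-in for a designer-generated resource class (hypothetical, for illustration only).
// A real generated class creates its manager lazily; this one does so eagerly for brevity.
class FakeResources
{
    private static ResourceManager resourceMan = new DictionaryResourceManager();
    public static string Greeting => resourceMan.GetString("Greeting", null);
}

// Stand-in for the default manager so the sketch needs no embedded resources.
class DictionaryResourceManager : ResourceManager
{
    private readonly Dictionary<string, string> strings =
        new Dictionary<string, string> { { "Greeting", "Hello" } };

    public override string GetString(string name, CultureInfo culture) => strings[name];
}

// Loads through the original manager, then post-processes what it returns.
class PostProcessingResourceManager : ResourceManager
{
    private readonly ResourceManager inner;

    public PostProcessingResourceManager(ResourceManager inner) { this.inner = inner; }

    // Brackets stand in for a real pseudo-localization transform.
    public override string GetString(string name, CultureInfo culture) =>
        "[" + inner.GetString(name, culture) + "]";
}

static class SwapSketch
{
    public static void Enable(Type resourcesType)
    {
        // Private reflection: locate the non-public static field holding the manager.
        FieldInfo field = resourcesType.GetField(
            "resourceMan", BindingFlags.Static | BindingFlags.NonPublic);
        var original = (ResourceManager)field.GetValue(null);

        // Replace it with a wrapper that delegates to the original.
        field.SetValue(null, new PostProcessingResourceManager(original));
    }
}
```

Before calling `SwapSketch.Enable(typeof(FakeResources))`, `FakeResources.Greeting` is `Hello`; afterwards it is `[Hello]`, and none of the code that reads the property had to change. That is the appeal of swapping the manager rather than touching every call site.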

Because the cost of fixing defects increases (dramatically) as a product gets closer to shipping, it's important to find and fix issues as early as possible. Localization can be a tricky thing to get all the kinks worked out of, so it's helpful to have good tools around to ensure a team is following good practices. With its low cost, simple implementation and friction-free usage model, I'm hopeful PseudoLocalizer will become another good tool to help people localize successfully!