Absolute coordinates corrupt absolutely [MouseButtonClicker gets support for absolute coordinates and virtual machine/remote desktop scenarios]

I wrote and shared MouseButtonClicker almost 12 years ago.

It's a simple Windows utility to automatically click the mouse button for you.

You can read about why that's interesting and how the program works in my blog post, "MouseButtonClicker clicks the mouse so you don't have to!" [Releasing binaries and source for a nifty mouse utility].

I've used MouseButtonClicker at work and at home pretty much every day since I wrote it.

It works perfectly well in normal scenarios, and also in virtual machine guest scenarios, because mouse input is handled by the host operating system and clicks are generated as user input to the remote desktop app.

That means it's possible to wave the mouse into and out of a virtual machine window and get the desired automatic-clicking behavior everywhere.

However, for a number of months now, I haven't been able to use MouseButtonClicker in one specific computing environment.

The details aren't important, but you can imagine a scenario like this one where the host operating system is locked down and doesn't permit the user (me) to execute third-party code.

Clearly, it's not possible to run MouseButtonClicker on the host OS in this case, but shouldn't it work fine within a virtual machine guest OS?

Actually, no. It turns out that raw mouse input messages for the VM guest are provided to that OS in absolute coordinates rather than relative coordinates. I had previously disallowed this scenario because absolute coordinates are also how tablet input devices (like those made by Wacom) present their input, and you do not want automatic mouse button clicking when using a pen or digitizer.
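For readers unfamiliar with raw input, the absolute/relative distinction is carried by a flag on each mouse message. What follows is a portable sketch rather than the program's actual code: `RawMouseSample` is a hypothetical stand-in for the `usFlags`, `lLastX`, and `lLastY` fields of the Win32 `RAWMOUSE` structure, and the flag constant is redefined locally (with the same value as `MOUSE_MOVE_ABSOLUTE` in the Windows headers) so the snippet builds without the Windows SDK.

```cpp
#include <cassert>
#include <cstdint>

// Local copy of the Win32 MOUSE_MOVE_ABSOLUTE flag value. MOUSE_MOVE_RELATIVE
// is 0x00, i.e. simply the absence of this bit.
constexpr std::uint16_t kMouseMoveAbsolute = 0x01;

// Hypothetical stand-in for the RAWMOUSE fields that matter here.
struct RawMouseSample {
    std::uint16_t flags;  // corresponds to RAWMOUSE::usFlags
    std::int32_t lastX;   // corresponds to RAWMOUSE::lLastX
    std::int32_t lastY;   // corresponds to RAWMOUSE::lLastY
};

// Test the bit instead of comparing the whole field, because other flag bits
// (for example MOUSE_VIRTUAL_DESKTOP, 0x02) can be set at the same time.
inline bool IsAbsolute(const RawMouseSample& sample) {
    return (sample.flags & kMouseMoveAbsolute) != 0;
}
```

With a check like this, the old behavior amounts to discarding every sample for which `IsAbsolute` returns true, and the new behavior amounts to routing those samples through a conversion step instead.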

But desperate times call for desperate measures, so I reversed my decision and added support for absolute mouse input based on this new understanding. The approach I took was to remember the previous absolute position and subtract it from the current absolute position to create a relative change, then reuse all the rest of the existing code. I used my Wacom tablet to generate absolute coordinates for development purposes, though it sadly violates the specification and reports values in the range [0, screen width/height] rather than the normalized range [0, 65,535]. This mostly doesn't matter, except that the anti-jitter bounding box I describe in the original blog post is tied to the units of mouse movement.
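The previous-position trick can be sketched in a few lines. This is a minimal illustration, not the code from the repository: `AbsoluteToRelative` and `Delta` are hypothetical names, and reporting a zero delta for the very first sample is my assumption about a reasonable way to handle having no earlier position.

```cpp
#include <cassert>
#include <cstdint>

// Relative movement between two consecutive absolute samples.
struct Delta {
    std::int32_t dx;
    std::int32_t dy;
};

// Hypothetical helper that turns a stream of absolute positions into the
// relative deltas the rest of the (relative-only) code already understands.
class AbsoluteToRelative {
public:
    // Returns current minus previous. The first sample has nothing to compare
    // against, so it yields a zero delta (an assumption made for this sketch).
    Delta Update(std::int32_t x, std::int32_t y) {
        Delta delta{0, 0};
        if (hasPrevious_) {
            delta.dx = x - previousX_;
            delta.dy = y - previousY_;
        }
        previousX_ = x;
        previousY_ = y;
        hasPrevious_ = true;
        return delta;
    }

private:
    bool hasPrevious_ = false;
    std::int32_t previousX_ = 0;
    std::int32_t previousY_ = 0;
};
```

Feeding it (1000, 2000) and then (1010, 1995) produces a delta of (10, -5) for the second sample, which is the same shape of data a relative mouse would have reported.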

Aside: The Wacom tablet also seems to generate a lot of spurious [0, 0] input messages (which are ignored), but I didn't spend time tracking this down because it's not a real use case.

In the relative coordinate world, ±2 pixels is very reasonable for jitter detection. However, in the absolute coordinate world (assuming an implementation that complies with the specification) 2 units out of 65,536 is insignificant. For reference, on a 4K display, each screen pixel is about 16 of these units. One could choose to scale the absolute input values according to the current width and height of the screen, but that runs into challenges when multiple screens (at different resolutions!) are present. Instead, I decided to scale all absolute input values down to a 4K maximum, thereby simulating a 4K (by 4K) screen for absolute coordinate scenarios. It's not perfect, but it's quick and it has proven accurate enough for the purposes of avoiding extra clicks due to mouse jitter.
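To make the arithmetic concrete, here is a rough sketch of the scaling idea. It assumes "4K" means 4,096 units per axis, which gives a divisor of 65,536 / 4,096 = 16; the factor used in the real code may differ. `ScaleAbsolute` and `IsJitter` are hypothetical names, and `IsJitter` is a simplified per-move threshold check rather than the bounding-box logic described in the original post.

```cpp
#include <cassert>
#include <cstdint>
#include <cstdlib>

constexpr std::int32_t kAbsoluteRange = 65536;   // size of the normalized absolute range
constexpr std::int32_t kSimulatedSize = 4096;    // assumed meaning of "4K" in this sketch
constexpr std::int32_t kScaleDivisor = kAbsoluteRange / kSimulatedSize;  // 16
constexpr std::int32_t kJitterThreshold = 2;     // the +/-2 tolerance from the text

// Maps a normalized absolute coordinate onto the simulated 4K-by-4K screen.
inline std::int32_t ScaleAbsolute(std::int32_t value) {
    assert(value >= 0 && value < kAbsoluteRange);
    return value / kScaleDivisor;
}

// Simplified jitter test: movement within the threshold on both axes.
inline bool IsJitter(std::int32_t dx, std::int32_t dy) {
    return std::abs(dx) <= kJitterThreshold && std::abs(dy) <= kJitterThreshold;
}
```

Without scaling, a 20-unit wobble in absolute coordinates (barely more than one pixel on a 4K display) would exceed the threshold and count as real movement. After scaling, the same wobble becomes a difference of about one unit and is treated as jitter.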

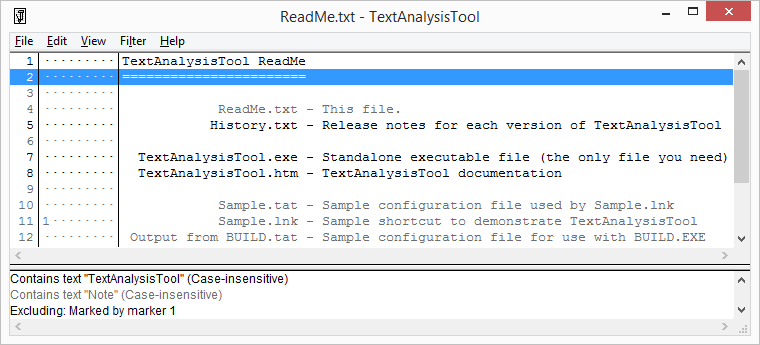

I also took the opportunity to publish the source code on GitHub and convert from a Visual Studio solution to a command-line build based on the Windows SDK and build tools (free for anyone to download; see the project README for more). Visual Studio changes its solution/project formats so frequently that I don't recall ever coming back to a project and being able to load it in the current version of VS without having to migrate it and deal with weird issues. With this conversion done, everything is simpler, more explicit, and free for all. I also opted into new security flags in the compiler and linker, so any 1337 haxxors trying to root my machine via phony mouse events/hardware are going to have a slightly harder time of it.

Aside: I set the compiler/linker flags to optimize for size (including an undocumented linker flag to actually exclude debug info), but the new executables are roughly double their previous size. Maybe it's the new anti-hacking protections? Maybe it's Maybelline?

With the addition of absolute coordinate support, MouseButtonClicker now works seamlessly in both environments: on the host operating system or within a virtual machine guest window.

That said, there are some details to be aware of.

For example, dragging the mouse out of a remote desktop window looks to the relevant OS as though the mouse was dragged to the edge of the screen and then stopped moving.

That means a click should be generated, and you might be surprised to see windows getting activated when their non-client area is clicked on.

If you're really unlucky, you'll drag the mouse out of the upper-right corner and a window will disappear as its close button is clicked (darn you, Fitts!).

It should be possible to add heuristics to prevent this sort of behavior, but it's also pretty easy to avoid once you know about it.

(Kind of like how you quickly learn to recognize inert parts of the screen where the mouse pointer can safely rest.)

The latest version of the code (with diffs to the previously published version) is available on the MouseButtonClicker GitHub project page.

32- and 64-bit binaries are available for download on the Releases page.

Pointers to a few similar tools for other operating systems/environments can be found at the bottom of the README.

If you've never experienced automatic mouse button clicking, I encourage you to try it out! There's a bit of an adjustment period to work through (and it's probably not going to appeal to everyone), but auto-clicking (a.k.a. dwell-clicking, a.k.a. hover-clicking) can be quite nice once you get the hang of it.